Let’s say you own an apparel brand that sends an email round-up of the new arrivals every Friday. The usual subject line for that email is “This week’s new arrivals!”

But now, the marketing department suggests trying a different subject line: “Check out these new arrivals!”

You collectively decide to divide the company’s email list and send emails with both subject lines to subscribers. One half will receive the original, and the other will receive the subject line variation.

After a while, your company learns that the email variation containing the new subject line is 5% more likely to convert than the previous version—so you change the subject line for all emails for this campaign to the one that performed better.

That process is called A/B testing. It helps you experiment with different components of your marketing email and find which variation performs best for your brand.

Let’s take a closer look at the email A/B testing parameters, best practices, and how to use Klaviyo to A/B test your email marketing campaigns so you can drive more revenue through your existing email campaigns and automations.

What is A/B testing in email marketing?

A/B testing (or split testing) is a method used to test variations of your emails, landing pages, SMS messages, and email sign-up forms. You can use it to optimise various email elements, gain insights into what resonates best with your audience, and make data-driven decisions to improve the effectiveness of your email campaigns.

Sending two variations of your welcome email, one with only text and the other with text and images, is an example of A/B testing for email marketing.

A/B testing terminology

Email marketers use the following technical terms to describe A/B testing and its results:

- Variable: The element whose value you are changing in A/B testing. For example, if you test red and blue call-to-action (CTA) buttons, the colour of the CTA button is the variable.

- Control: The original value of the variable. For example, if you usually use a blue CTA button and now want to test a red button, blue is the control.

- Control group: The group of subscribers to whom you send the control. In our example, the control group is the people who received the email with the blue CTA button.

- Variation(s): The new versions of emails you create by changing the value of your test variable. In our example, the email with the red button is one variation. You may try any number of variations, but it’s good practice to limit your test to 4 variations.

- Winner: The variation that produces the best values for the performance metrics.

With the basic definitions out of the way, let’s look at how to perform email A/B testing and how it helps improve your email marketing efforts.

Significance of email A/B testing

A/B testing helps you experiment with email marketing ideas. It gives you data-backed results and eliminates the need for guesswork. In A/B testing, you test only one variable at a time, which gives you clarity on how each element in your email influences its performance.

According to the Ariyh newsletter, startups that regularly A/B test receive 10% more webpage visits in the first months. Engagement also increases 30-100% after a year. Even a slight tweak like changing the colour of your CTA button may result in a performance improvement. Skipping A/B testing means leaving money on the table.

What do you test for in email A/B testing?

- Email “from name”

- Email subject line

- Layout and elements of email

- Email copy

- CTA

- Send time

Email A/B testing is straightforward if you approach it systematically. Let’s dive into what the tests for each of these areas entail.

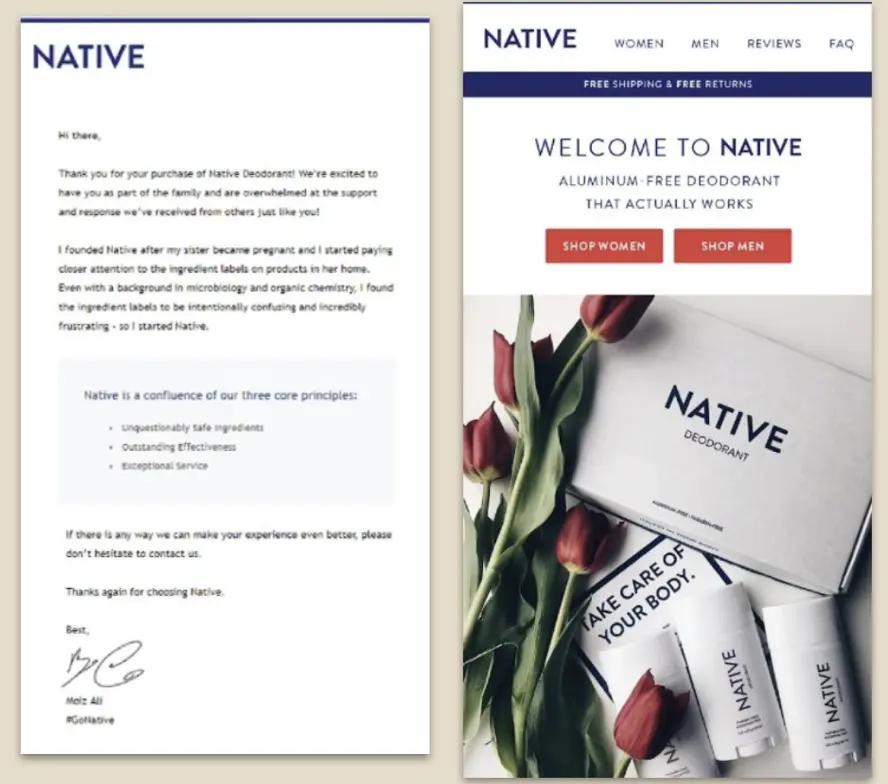

1. From name

The “from name” is the sender’s name that appears in an email’s inbox preview. It informs the recipient of who the sender is.

As you can see, organisations can use different “from names” for their marketing emails.

The “from name” influences how a recipient responds to an email.

Here are a few variations of the “from name” worth testing:

- Your organisation’s name

- Sender’s name along with company name

- Just a person’s name if they have a strong personal brand

- A department of your organisation (for example, “Klaviyo Research”)

Tip: Don’t use ambiguous “from names.” Make it easy for the recipient to know the email is from your organisation.

2. Email subject line

The subject line is the first thing a person notices about an email—so much so that 47% of recipients open emails based on the subject line.

An email subject line that engages the recipient can improve open rates. Here are a few aspects to consider while A/B testing email subject lines.

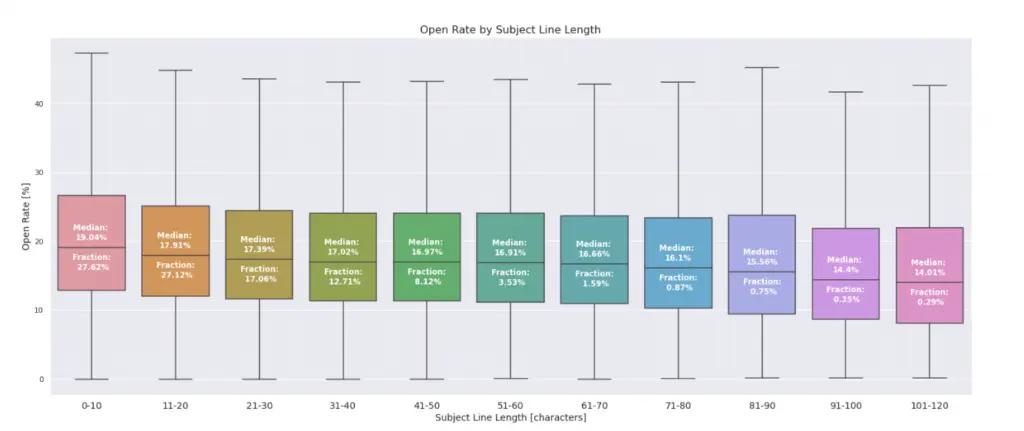

Length

Our research shows that subject line length and email open rates are inversely related: the longer the subject line, the fewer people will open the email. The optimal length may differ for your audience, so try varying the subject line’s length by a few characters and measuring the email’s performance.

Note: While A/B testing variations of the subject line, don’t forget about mobile device users. Mobile inboxes typically display only the first 30-40 characters of a subject line.

“Oftentimes, a subject line that reads well on desktop may get cut off in mobile inboxes, so it’s always a good idea to view your subject lines on both desktop and mobile during your testing process. Use shorter copy to ensure your enticing subject line doesn’t lose its effect on mobile,” advises Cassie Benjamin, email/SMS channel manager, Tadpull.
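To make the mobile check concrete, here is a minimal Python sketch that previews how a subject line might be cut off. The `mobile_preview` helper and its 35-character default are assumptions chosen from the 30-40 character range mentioned above; real inboxes truncate differently depending on the device and email app.

```python
def mobile_preview(subject: str, limit: int = 35) -> str:
    """Return the subject as it might appear when cut to `limit` characters.

    `limit` is an assumed value, not a rule enforced by any email client.
    """
    if len(subject) <= limit:
        return subject
    # Keep room for the ellipsis that marks the cut.
    return subject[: limit - 1].rstrip() + "…"


short_subject = "This week's new arrivals!"
long_subject = "Shop for your favourite products at 30% off!"

for subject in (short_subject, long_subject):
    print(f"{len(subject):>2} chars -> {mobile_preview(subject)}")
```

Running candidate subject lines through a check like this before a test helps confirm that each variation still reads well when shortened.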

Format and personalisation

The format of your subject line can have a significant impact on the recipient. For instance, we found that subject lines without fully capitalised words performed 12% better than subject lines with all caps.

Here are a few formatting ideas for your email subject lines:

- Using one or more punctuation marks in the subject line

- Ordering words hierarchically (for example, “30% off on your favourite products!” vs. “Shop for your favourite products at 30% off!”)

You can also try personalising subject lines using the recipient’s first name.

Tip: Using punctuation or emojis to make your subject line stand out is fine, but don’t overdo it. Something as simple as using consecutive punctuation marks can reduce the effectiveness of your emails by 19%.

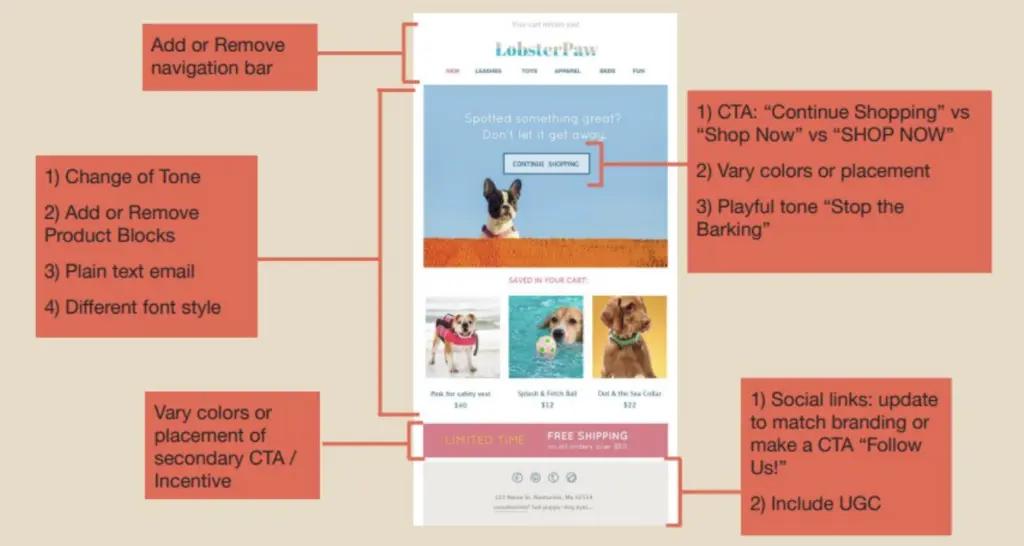

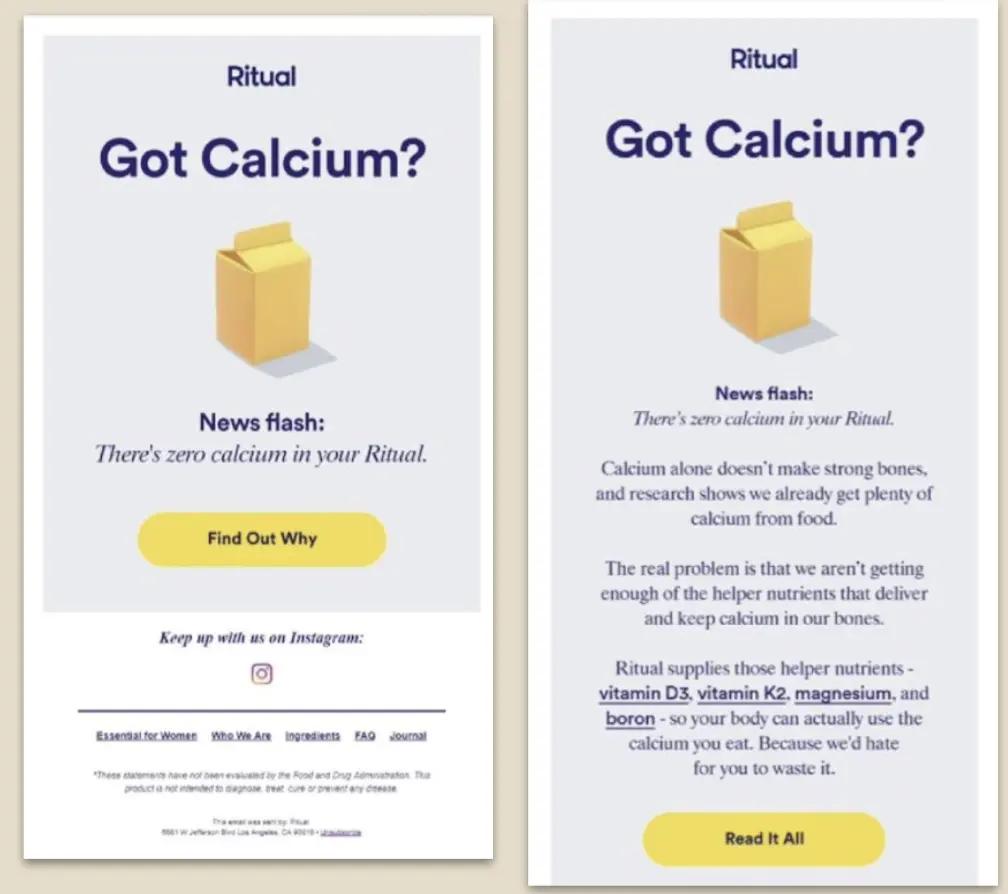

3. Layout and email elements

Marketing emails often contain text, images, videos, gifs, and mixed media. You can A/B test your emails by tweaking these email elements.

Here are a few A/B test options:

- Images only vs. text only

- Variations of hero images (for example, with people vs. without people)

- Image formats (gifs vs. static images)

- Videos vs. infographics

- Social proof vs. no social proof

- Different types of offers

“You can test 10% off, free shipping, and similar and see what offer drives the highest amount of conversions. You can even split test what email in your flow you introduce the offer in. Remember, when making an offer in your abandoned cart email sequence, adding scarcity in time or quantity will help entice your customers to finish their order,” says Toccara Karizma, CEO, Karizma Marketing.

You can also play with the overall layout, placing components of email design like the headline, tagline, descriptions, text, visuals, and CTA in different places. The idea is to learn which arrangement makes for better readability and, in turn, a better user experience.

Here are various email layouts you can use for A/B tests:

- Single column layout

- Multi-column layout

- Image grids

- F-pattern layout

- Zig-zag layout

You could also A/B test pre-built email templates offered by your email marketing provider.

Tip: Ensure your email has a responsive design to render on all screen sizes. Think about mobile-friendly layouts when you A/B test.

4. Email copy

Try A/B testing minimalistic copy supported by images against detailed email copy. Statista reports that a person only spends 10 seconds on a marketing email. Compact email copy helps make the most of that time.

That doesn’t mean shorter copy always wins, though. You might want to test longer email copy if you wish to:

- Share your brand’s story or values

- Show how to use your products

- Explain a policy or a process change

You can also A/B test the tone of your email. Try using casual, fun-to-read email copy rather than formal copy. Also, test whether emojis work for your emails.

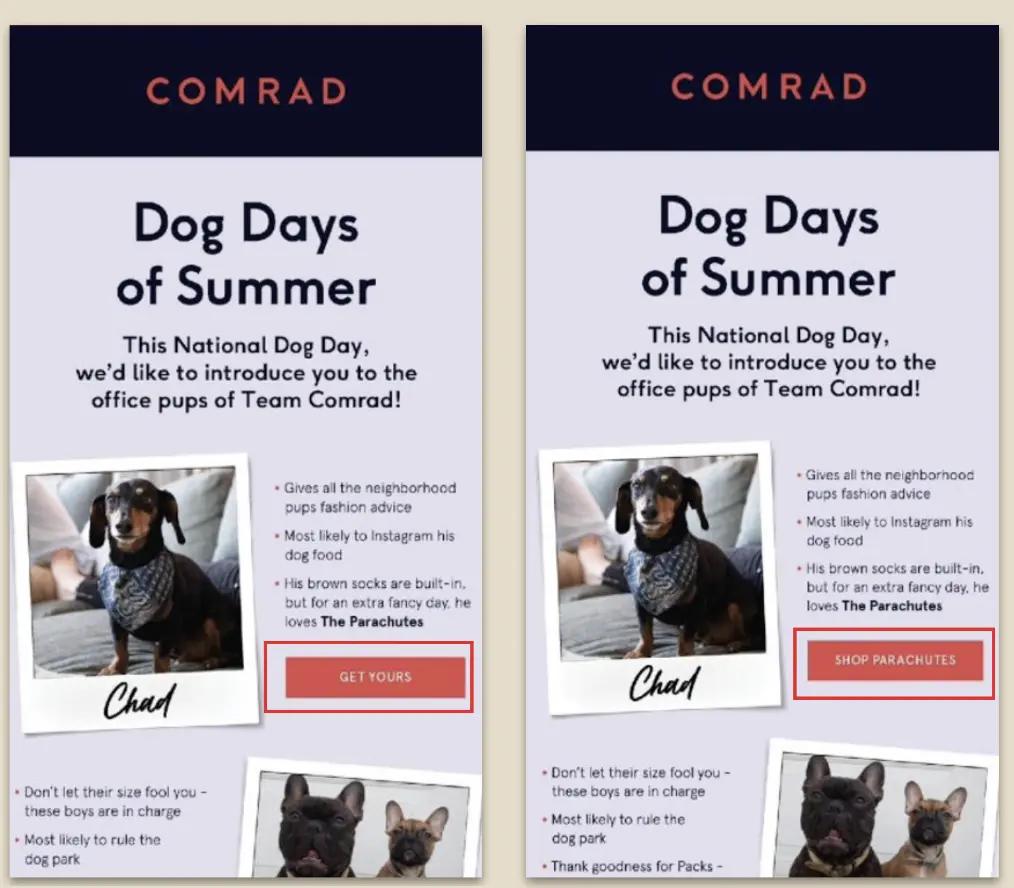

5. Calls to action

Calls to action (CTAs) are a crucial element in marketing emails. If done well, they can drive traffic and result in sales.

With that in mind, here are a few CTA attributes you may want to A/B test.

Number and placement of CTAs

A prominent CTA helps capture the subscriber’s attention. However, A/B testing with multiple CTAs is worth it if:

- Your email is too long, and the recipient has to scroll to find the CTA.

- You are highlighting more than one product.

- You have an ongoing sale with several offers.

You can also test the CTA’s placement. For instance:

- Above the fold vs. below the fold

- After all your images vs. sprinkled between the images

CTA hyperlinks vs. CTA buttons

Using hyperlinks as your CTA can help your email appear more casual. You can just drop the link without breaking the copy’s overall flow.

Conversely, you can use a CTA button that’s easy to locate and attracts the recipient’s attention. That doesn’t mean your button can’t be discreet and fit well with the copy; just look at many of the email examples we’ve shown you here.

CTA colour, copy, and length

Colours can evoke specific emotions in your recipients. For example, yellow and red evoke hunger, which is why many fast food chains use these colours.

In that sense, it’s an excellent idea to A/B test different colours for your CTA—whether guided by psychology or the desire to match your brand’s style.

A/B testing the copy and length of your CTA will help you determine what works the best for you. For instance, you can try short, action-oriented CTAs like “claim the offer” vs. conversational CTA copy like “read more.”

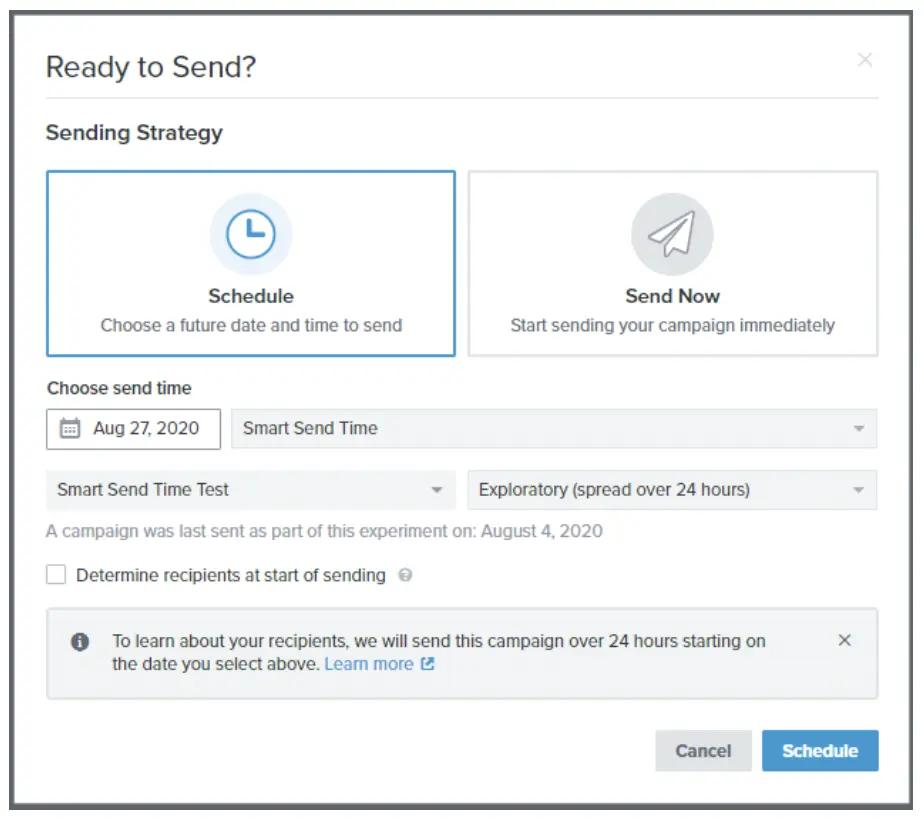

6. Send time

While it’s true that emails sent on working days get more engagement, you need to find the day and time that works best for you. A/B testing your send time will help you get there.

You can also use tools to aid your tests. For instance, Klaviyo’s smart send time feature suggests the best send times based on your recipient’s email engagement patterns.

“A/B testing send times is one of the most underrated strategies I see with brands. Just by testing morning sends vs. night sends, you might see an extraordinary difference in conversions,” says Brandon Matis, owner, Luxor Marketing.

How do you measure A/B testing success?

Before running an A/B test, you need to decide how you’ll determine the winner. To that end, you should choose a performance metric based on the A/B test variable. For example:

- Open rate: if you’re testing “from name,” subject line, or preview text

- Click rate: if you’re testing CTA variations, layout, or email copy
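As a rough sketch of how these two metrics could be computed and compared, the Python below uses a hypothetical `VariationStats` record with made-up counts. It assumes both rates are measured against delivered emails; your email platform may define them differently.

```python
from dataclasses import dataclass


@dataclass
class VariationStats:
    """Hypothetical per-variation counts exported from an email platform."""
    name: str
    delivered: int
    opens: int
    clicks: int

    @property
    def open_rate(self) -> float:
        return self.opens / self.delivered if self.delivered else 0.0

    @property
    def click_rate(self) -> float:
        return self.clicks / self.delivered if self.delivered else 0.0


def pick_leader(variations, metric):
    """Return the variation with the highest value for the chosen metric."""
    return max(variations, key=lambda v: getattr(v, metric))


# Illustrative numbers only.
control = VariationStats("control", delivered=1000, opens=250, clicks=40)
variation = VariationStats("variation", delivered=1000, opens=280, clicks=38)

print(pick_leader([control, variation], "open_rate").name)
print(pick_leader([control, variation], "click_rate").name)
```

With these sample counts the leader changes depending on the metric, which is why the winning metric should be fixed before the test starts.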

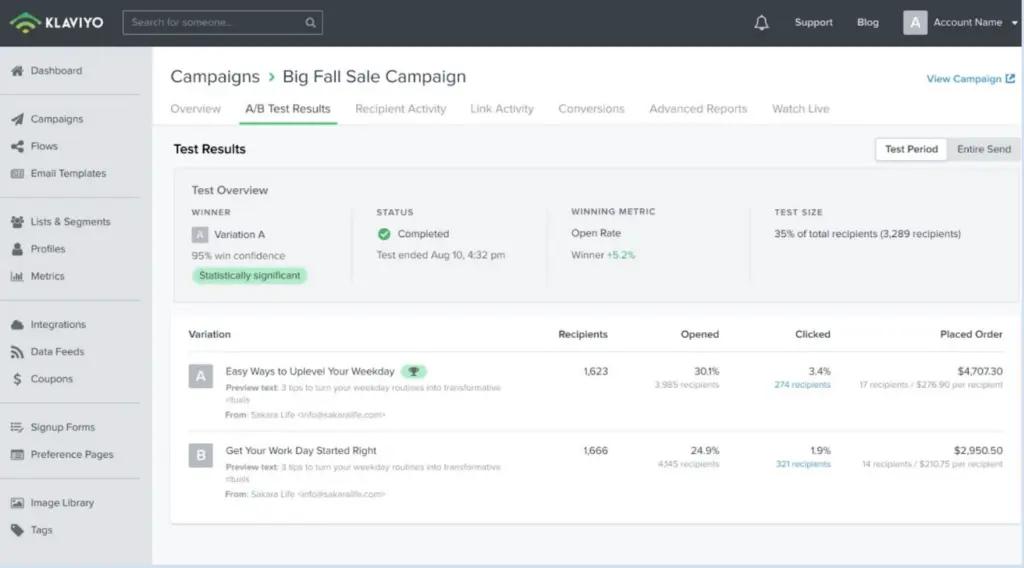

Email marketing platforms like Klaviyo help you set up and collect the results of A/B tests. But after you receive the results, how do you interpret them?

Assume you A/B tested two email layouts. Version A gave you a 15% click rate, whereas version B resulted in a 14% click rate. Does it mean version A is the winner?

A/B testing is a method of statistical inference where you draw a conclusion about your whole audience from the behaviour of a sample. That means a small difference like 15% vs. 14% could be down to chance, so you need to make sure the results are statistically significant. Klaviyo helps you mathematically determine the reliability of your A/B test results.
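To illustrate why a 15% vs. 14% click rate is not automatically a win, here is a minimal sketch of a two-proportion z-test in Python. This is a standard textbook test, not necessarily the calculation Klaviyo runs, and the recipient counts are assumptions made up for the example.

```python
import math


def two_proportion_z_test(clicks_a, n_a, clicks_b, n_b):
    """Two-sided z-test for the difference between two click rates."""
    p_a = clicks_a / n_a
    p_b = clicks_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Assumed scenario 1: 1,000 recipients per version (150 vs. 140 clicks).
z_small, p_small = two_proportion_z_test(150, 1000, 140, 1000)

# Assumed scenario 2: 20,000 recipients per version (3,000 vs. 2,800 clicks).
z_large, p_large = two_proportion_z_test(3000, 20000, 2800, 20000)

print(f"1,000 per version:  z={z_small:.2f}, p={p_small:.3f}")
print(f"20,000 per version: z={z_large:.2f}, p={p_large:.4f}")
```

The same one-point gap falls short of the conventional 0.05 threshold with 1,000 recipients per version but clears it with 20,000, which is why sample size matters as much as the raw difference.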

Based on statistical significance, Klaviyo categorises A/B test results into 4 groups:

- Statistically significant: Klaviyo classifies an A/B test result as statistically significant when the winning variation has a high chance of beating the other variations in future sends. In other words, a statistically significant A/B test win means that the winning email is very likely to keep getting more engagement if you repeat the test.

- Promising: If the test result is promising, it means the winning version has a chance of getting more engagement in the future, but you may need to run more A/B tests to make sure.

- Not statistically significant: Klaviyo categorises a test result as not statistically significant when the winning variation is only slightly better than the others. This means you might not be able to reproduce the same results if you repeat the A/B test.

- Inconclusive: If there is not enough information to conclude the test, Klaviyo categorises it as inconclusive. It also means the A/B testing didn’t get enough responses to measure the results. In that case, you may want to expand your recipient pool or follow up with more tests.

Email A/B testing best practices

It’s best to follow a set of guidelines and principles with each test to get the most out of your A/B testing program. Here are some go-to practices that will help you have successful tests moving forward:

- Develop a hypothesis

- Use a large sample

- Test simultaneously

- Test high-impact, low-effort elements first

- Prioritise the emails you send the most

- Wait enough time before evaluating performance

- Test a single variable and no more than 4 variations at a time

- Use A/B testing tools

Develop a hypothesis

Before choosing what variables to test, you need to create a hypothesis based on what you’re trying to achieve with your A/B testing.

A/B testing usually occurs when a team’s marketing strategy is going well, but they want to fine-tune their communication with customers.

So, you may develop hypotheses like:

- A subject line with an emoji will perform better than one without

- An email coming from a person at our company will perform better than one coming from our company name itself

- An email with clearer CTAs higher in the email will perform better

- Emails in a specific sequence will perform better if they have more images

Based on the hypothesis, you must choose the email elements to test. If you already have a successful marketing campaign, you can start by testing micro-elements like:

- CTA button colour

- Preview text

- Subject line length

By contrast, if you want to make a major difference to your marketing, test more significant elements like email layout, images, and brand voice.

Use a large sample

A/B testing is a statistical experiment where you derive insight from the response of a group of people. The larger the sample size of the study, the more accurate the results.

We recommend sending each version of your email to at least 1K recipients. Sending to a smaller group may result in statistically insignificant or inconclusive results.
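How large a sample you need depends on how small a difference you want to detect. The sketch below uses a common textbook approximation for comparing two rates; the function name, the 5% significance level, and the 80% power are assumptions for illustration, not figures from Klaviyo.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate recipients needed per version to detect the given difference."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Detecting a jump from a 10% to a 15% rate needs far fewer recipients...
large_gap = sample_size_per_variation(0.10, 0.15)
# ...than detecting a jump from 14% to 15%.
small_gap = sample_size_per_variation(0.14, 0.15)

print(large_gap, small_gap)
```

Under these assumptions, a 1,000-recipient group is enough to spot a large difference, while a one-point difference calls for a much bigger list.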

Test simultaneously

Send time plays a crucial role in how a recipient responds to an email. For example, if you send one variation on a weekday and another on the weekend, timing will skew the results.

Unless the variable you’re testing is send time, it’s best to send both the control and variation simultaneously. That will help eliminate time-based factors, ensuring you get the best results possible.

Test high-impact, low-effort elements first

You can test many elements—and you might not have enough resources to test them all. Also, running many A/B tests at a time might prove confusing.

Therefore, it’s a good practice to start with high-impact elements that take less time to test. For instance:

- Subject line

- CTA copy

- Email layout

Once you’re done with the low-effort elements, you can test high-effort ones.

Prioritise the emails you send the most

Invest your A/B testing efforts into the activities that yield the most profit. Start with perfecting the emails you frequently send, such as:

- Weekly newsletters

- Promotional or offer emails

- Welcome emails

Targeting these emails ensures you reach many recipients and get a better return on investment on your A/B testing efforts.

Wait enough time before evaluating performance

Even if you have sent an email to a large group of people, they might not engage with it immediately.

Most people take time to get around to their inbox, so make sure you wait long enough for your recipients to engage with the email. With Klaviyo, you can set the test duration, after which the winner will be declared.

Test a single variable and no more than 4 variations at a time

You may have many ideas for A/B testing your emails, but it’s best to test one variable at a time. Testing more than one variable simultaneously makes it difficult to attribute results.

For example, let’s say you change the CTA button colour and copy simultaneously. If you see an increase in the click rates, it will be challenging to determine whether the colour or the copy impacted the results.

We recommend testing up to 4 variations at a time. You’ll need a larger sample to test more variations accurately.
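As a back-of-the-envelope sketch of why more variations need a larger sample, the hypothetical `plan_test` helper below multiplies a per-version minimum by the number of versions. It assumes the control is counted in addition to the variations and reuses the 1,000-recipient guideline from earlier. It also applies a Bonferroni correction, a general statistical technique (not something described for Klaviyo) that tightens the significance threshold when several variations are compared against one control.

```python
def plan_test(num_variations, per_version_minimum=1000, alpha=0.05):
    """Estimate the list size and per-comparison threshold for a test."""
    versions = num_variations + 1  # assumption: variations plus one control
    return {
        "versions": versions,
        "minimum_recipients": versions * per_version_minimum,
        # Bonferroni correction: split alpha across the comparisons with control.
        "alpha_per_comparison": alpha / num_variations,
    }


for count in (1, 2, 4):
    print(count, plan_test(count))
```

Each extra variation adds another group to fill and makes each individual comparison stricter, so the required list grows quickly.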

Use A/B email testing tools

It’s challenging to scale up manual A/B testing. If you choose an email marketing platform like Klaviyo, you can easily set up A/B testing and observe your results.

On that note, let’s explore how Klaviyo elevates your A/B testing experience.

How to A/B test email flows and campaigns using Klaviyo

Manually testing your emails might take a lot of time, and capturing the results can also prove challenging. But that doesn’t have to be the case.

Here’s how you can start A/B testing email campaigns and email flows with Klaviyo.

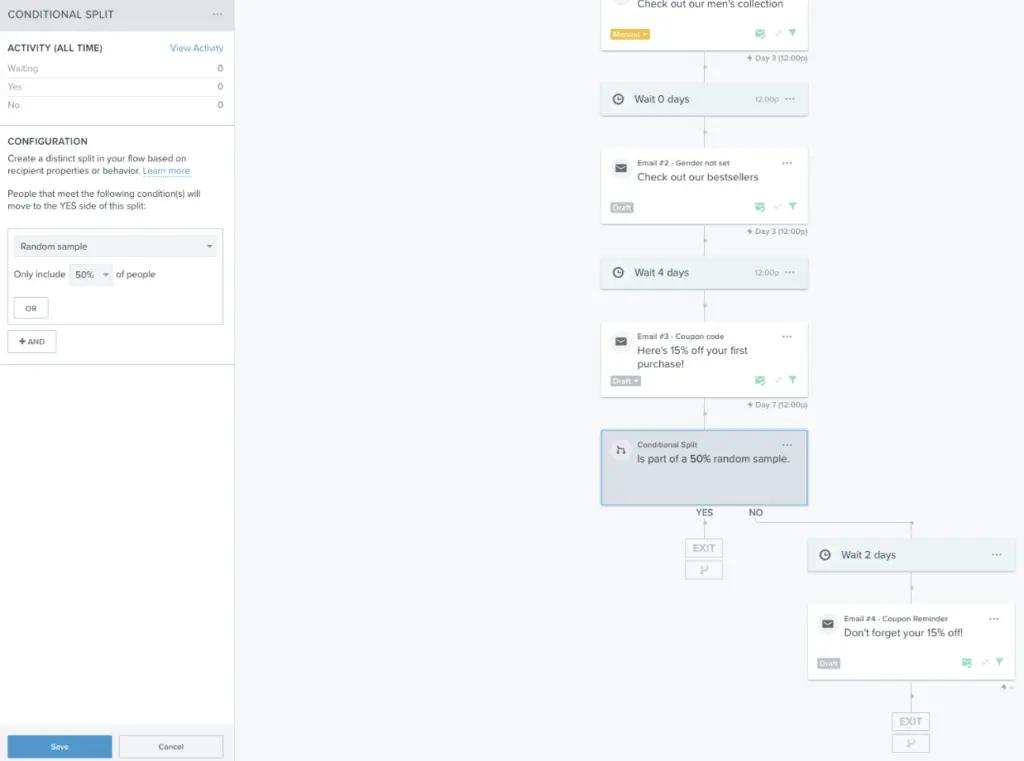

A/B testing automated email flows with Klaviyo

Email flows, also known as email automation, are automated emails that are sent when customers perform an action. For example, when a customer abandons a shopping cart, Klaviyo can trigger an email flow that reminds the customer to complete their purchase.

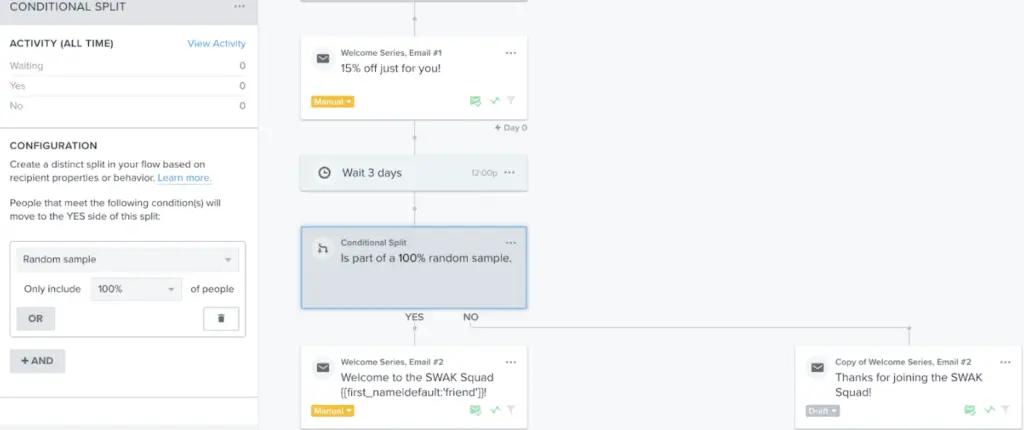

You can use Klaviyo’s conditional split feature to A/B test an email flow. Here are the steps:

- Step 1: Navigate to the email flow that you want to test.

- Step 2: Add a conditional split with the condition as “random sample.”


- Step 3: Select the percentage of recipients who’ll go down the YES path (the control group).

- Step 4: Create a test branch with one or more variations in the email.

- Step 5: To end the conditional split, set the percentage of recipients going down the YES path to 100%.
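Conceptually, a random sample split sends each recipient down one of two paths with a fixed probability. The Python below is a simplified illustration of that idea, not Klaviyo’s implementation; the `assign_paths` function, the `seed` parameter, and the recipient addresses are hypothetical.

```python
import random


def assign_paths(recipients, yes_share, seed=None):
    """Randomly route each recipient to the YES or NO path.

    `yes_share` is the fraction (0.0 to 1.0) that should go down the YES path.
    """
    rng = random.Random(seed)
    paths = {"YES": [], "NO": []}
    for recipient in recipients:
        branch = "YES" if rng.random() < yes_share else "NO"
        paths[branch].append(recipient)
    return paths


recipients = [f"user{i}@example.com" for i in range(10_000)]

# During the test: roughly half of the recipients get the control (YES path).
during_test = assign_paths(recipients, yes_share=0.5, seed=42)

# Ending the test: a 100% YES share sends everyone down a single path.
after_test = assign_paths(recipients, yes_share=1.0, seed=42)

print(len(during_test["YES"]), len(during_test["NO"]))
print(len(after_test["YES"]), len(after_test["NO"]))
```

Because assignment is random rather than based on recipient traits, differences between the two paths can be attributed to the email variation itself.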

A/B testing email campaigns with Klaviyo

An email campaign is a one-time email sent to a specific audience at a predetermined time. Sales promotions and newsletters are examples of email campaigns.

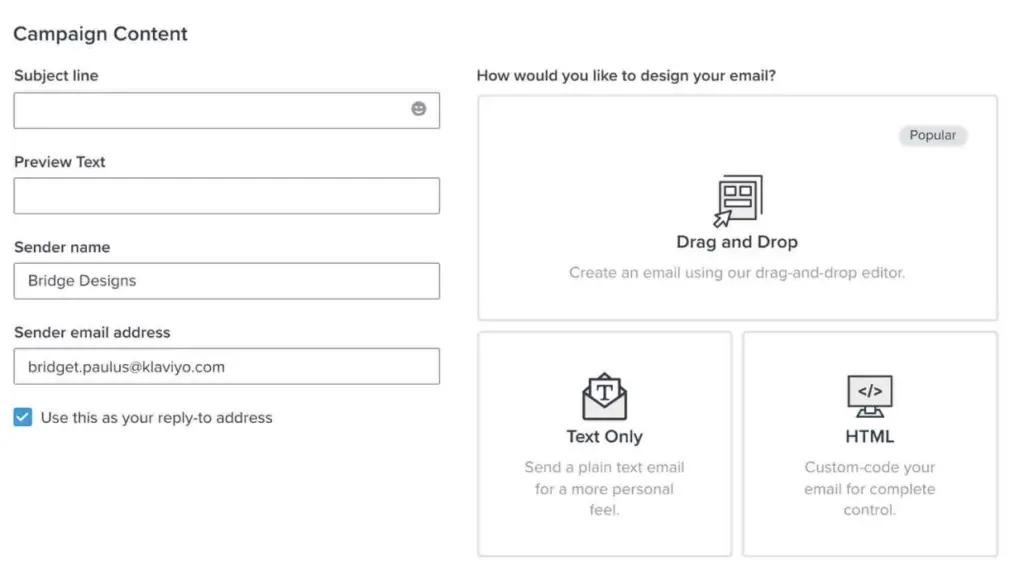

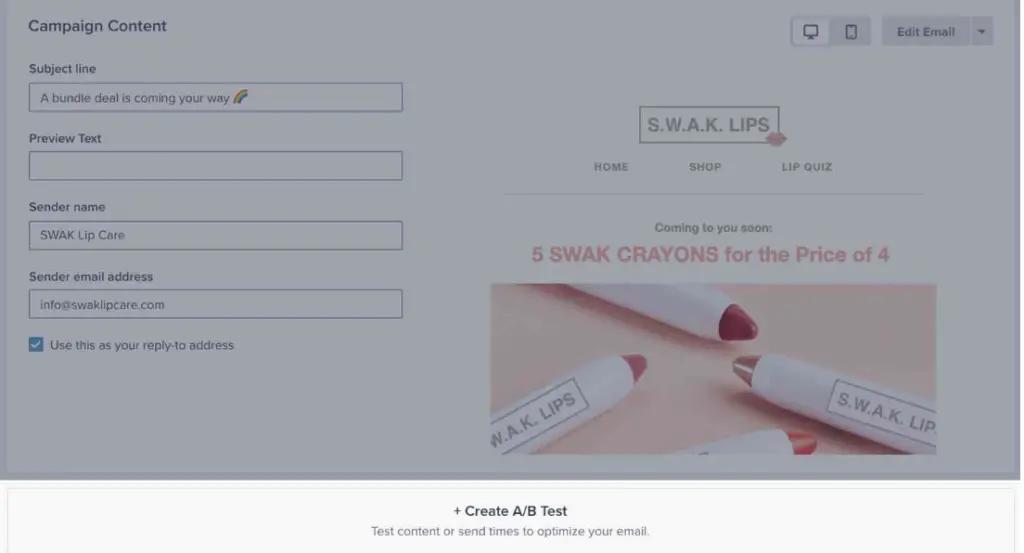

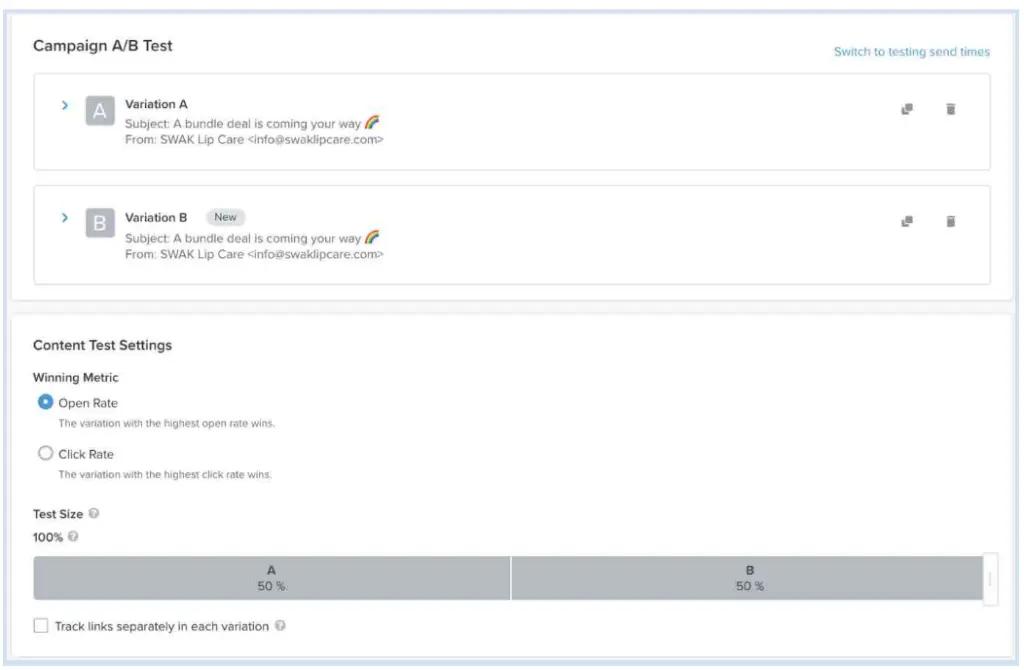

When you create an email campaign using Klaviyo, you can also set up an A/B test. Here are the steps to A/B test campaign emails:

- Step 1: Create an email design and select your target segment.

- Step 2: After saving the campaign content, click on “Create A/B Test.”

- Step 3: Add the details of the variations you want to test.

- Step 4: Choose a winning metric.

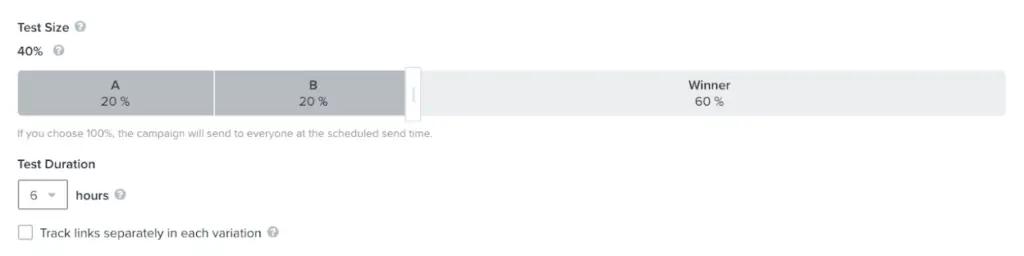

- Step 5: Choose the test size and duration. Klaviyo will suggest a smart test size based on your campaign.

- Step 6: Review your A/B test result.

Note: Unlike email flow A/B testing, email campaign testing lets you test send time as well as email content variations.

Even more types of A/B email testing

In A/B testing, you vary just one element of an email, website, or SMS. If you want to go further, you may try the following tests:

- Multivariate testing

- Champion vs. challenger

- Hold-out testing

Multivariate testing

Unlike standard A/B testing, multivariate testing is designed to test how multiple variables work together toward a specific goal. In other words, it helps you find the winning combinations for your email marketing.
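To see how quickly combinations add up in a multivariate test, here is a small Python sketch that enumerates every combination of a few email elements. The element names and values are made up for illustration.

```python
from itertools import product

# Hypothetical elements and the values to test for each one.
elements = {
    "subject_line": ["This week's new arrivals!", "Check out these new arrivals!"],
    "cta_colour": ["blue", "red"],
    "hero_image": ["with people", "without people"],
}

# Every combination of one value per element becomes its own email version.
combinations = [
    dict(zip(elements, values)) for values in product(*elements.values())
]

print(f"{len(combinations)} versions to test")
for combo in combinations:
    print(combo)
```

Three elements with two values each already produce eight versions, and each version needs its own share of recipients, so multivariate tests call for a much larger list than a simple A/B test.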

Champion vs. challenger

This test is similar to A/B testing—you can test 2+ variations of a single email element.

What makes champion vs. challenger testing different is you keep testing the winner (champion) against a new variation for a predetermined period instead of settling for one round of testing.
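The rolling nature of champion vs. challenger testing can be sketched as a simple loop. In the Python below, `run_rounds` and the click rates are hypothetical; a real program would run a fresh test each round and check statistical significance before promoting a challenger.

```python
def run_rounds(champion, challengers, click_rate):
    """Test the current champion against each challenger in turn."""
    history = []
    for challenger in challengers:
        # On a tie, max() keeps the first item, so the champion stays in place.
        winner = max((champion, challenger), key=click_rate)
        history.append((champion, challenger, winner))
        champion = winner
    return champion, history


# Made-up click rates standing in for the result of each round's test.
observed = {
    "Subject A": 0.040,
    "Subject B": 0.046,
    "Subject C": 0.043,
    "Subject D": 0.051,
}

final_champion, history = run_rounds(
    "Subject A", ["Subject B", "Subject C", "Subject D"], observed.get
)

for champion, challenger, winner in history:
    print(f"{champion} vs. {challenger}: {winner} wins")
print("Final champion:", final_champion)
```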

Hold-out testing

Hold-out testing is a method to measure the long-term performance of an A/B test winner. Typically, after you finish an A/B test, you send every recipient the winning variation of the email. In hold-out, you keep sending the original email to your control group.

That helps you understand whether the A/B test result was skewed and what its long-term effects are.
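One way to picture a hold-out is as a small random group set aside before the winner is rolled out. The sketch below is an illustration only; the `split_holdout` function, the 10% hold-out share, and the recipient addresses are assumptions rather than recommended settings.

```python
import random


def split_holdout(recipients, holdout_share=0.1, seed=None):
    """Reserve a random share of recipients who keep receiving the original email."""
    rng = random.Random(seed)
    shuffled = list(recipients)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * holdout_share)
    holdout_group = shuffled[:cut]   # keeps getting the original email
    winner_group = shuffled[cut:]    # gets the winning variation
    return holdout_group, winner_group


recipients = [f"user{i}@example.com" for i in range(1000)]
holdout_group, winner_group = split_holdout(recipients, holdout_share=0.1, seed=7)

print(len(holdout_group), len(winner_group))
```

Comparing the two groups over the following weeks or months shows whether the winner’s advantage holds up over time.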

A/B testing SMS campaigns

A/B testing SMS messages along with emails will help boost your marketing campaigns. Klaviyo helps you A/B test your SMS messages by varying elements like:

- SMS copy

- Emojis

- Images/gifs

- CTA

- Send time

Final thoughts: a comprehensive guide for email A/B testing

A/B testing emails helps you identify the elements that most influence engagement. During A/B testing, you experiment with 2+ variations of an email attribute—subject lines, CTAs, layouts, and images, among others.

To ensure your email A/B testing is successful, it’s best to follow the practices we listed and support them with email marketing platforms like Klaviyo.

Klaviyo helps you A/B test your email flows and campaigns and provides a mathematical analysis of the test results. If you’re ready to take advantage of A/B testing and myriad other features, check out Klaviyo’s email marketing solutions today.

Explore all that Klaviyo has to offer.